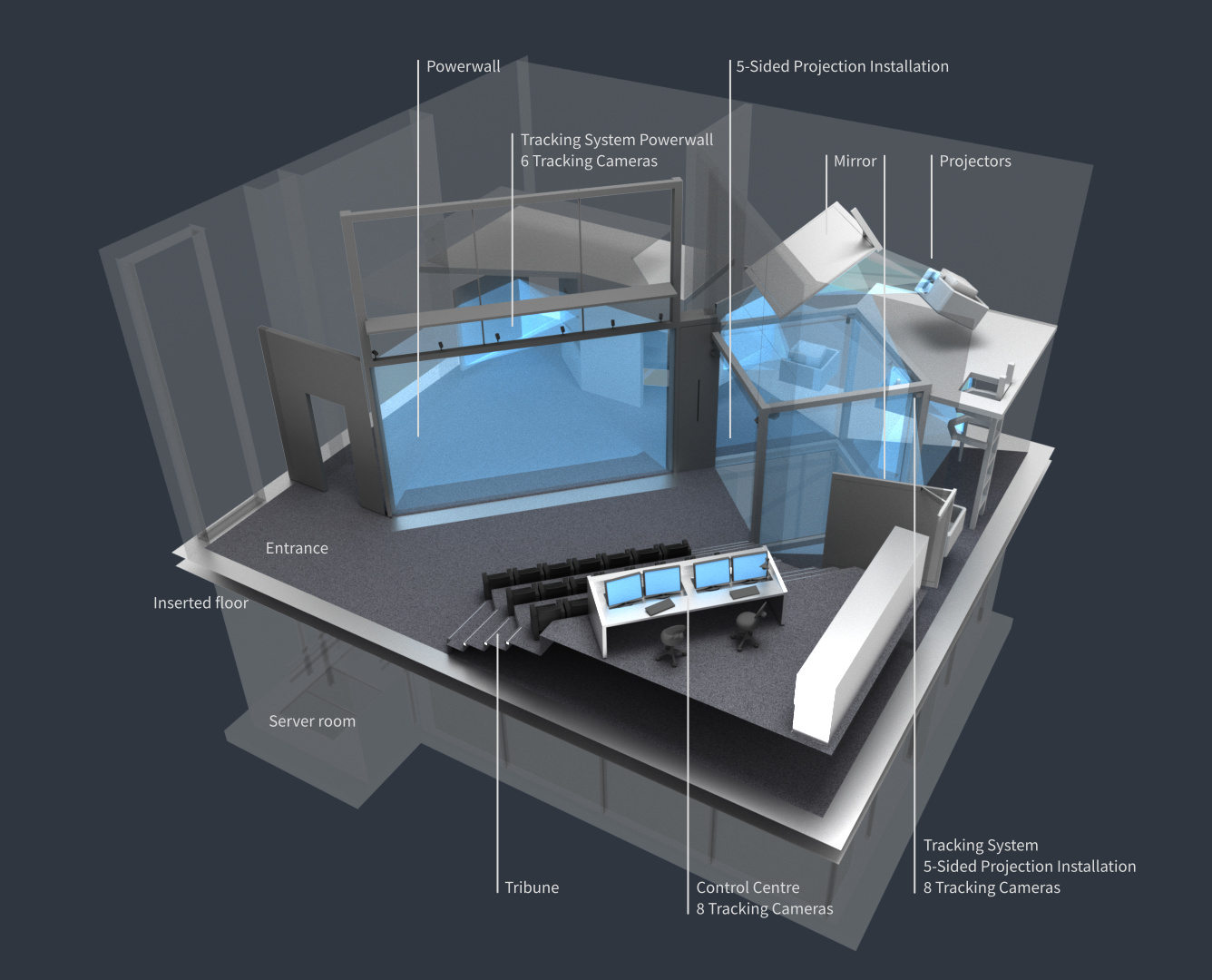

Last year, Magnum was used to introduce students to virtual reality programming at the Ludwig-Maximilians-Universität München — powering a CAVE-like environment, a room-scale five-sided projection installation.

Teaching virtual reality always benefits from practical experience. A big part of the lecture Virtual Reality, hosted at the Ludwig-Maximilians-Universität München and open to students of both Munich universities, is therefore a hands-on VR project of the students' own. This project has to run on the facilities of the Virtual Reality and Visualisation Centre (V2C) of the Leibniz Supercomputing Centre (Leibniz-Rechenzentrum, LRZ) of the Bavarian Academy of Sciences and Humanities.[1] There, students use the five-sided projection installation for their projects.

Having previously worked with OpenSG, last year we decided to use Magnum as the graphics engine for the students to start with, and we are sticking with it this year as well. We were lucky that Vladimír could visit us in Munich and give an introductory lecture on how to use Magnum. In this lecture, the students got a general overview of how Magnum is structured and learned how to create their own rendering application using the scene graph, the model loading features and the input event system.

Although this provided a great starting point, our five-sided projection installation requires applications written for a cluster of 10 render nodes plus one master node. For that, we provide our own small library that keeps an application running on those 10 nodes in sync.

The application must be built as two executables: one for rendering, which runs on the render nodes, and one that runs on the master node. Each has its own class with a distinct set of features. The master node acts as a synchronization server, meaning data is distributed from it to each of the render nodes. This data can be tracking data, transformations from interactions, physics simulation results, or any other kind of input or dynamics. For the render nodes, the master node also serves as a synchronization point for buffer swaps: after a node has finished rendering, it sends a request to the master node and waits for a response. The master node only responds once all pre-determined nodes have sent their request. This way we can be certain that every node renders the same scene with the same input data.
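The buffer-swap synchronization described above is essentially a barrier. The sketch below is a hypothetical, single-process illustration, not the actual LRZ library: threads stand in for the render nodes, and a mutex plus condition variable stand in for the network request/response to the master node. `SwapBarrier` and `renderInLockstep` are names made up for this example.

```cpp
#include <atomic>
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for the master node's swap barrier: it only
// "responds" once every pre-determined render node has sent a request.
class SwapBarrier {
public:
    explicit SwapBarrier(std::size_t nodeCount): _nodeCount{nodeCount} {}

    // Called by a render node after it finished rendering a frame.
    // Blocks until all nodes have called it for the same frame.
    void requestSwap() {
        std::unique_lock<std::mutex> lock{_mutex};
        const std::size_t frame = _swappedFrames;
        if(++_pendingRequests == _nodeCount) {
            // Last request arrived: respond to all waiting nodes at once
            _pendingRequests = 0;
            ++_swappedFrames;
            _responses.notify_all();
        } else {
            _responses.wait(lock, [&]{ return _swappedFrames != frame; });
        }
    }

    std::size_t swappedFrames() const {
        std::lock_guard<std::mutex> lock{_mutex};
        return _swappedFrames;
    }

private:
    const std::size_t _nodeCount;
    std::size_t _pendingRequests = 0;
    std::size_t _swappedFrames = 0;
    mutable std::mutex _mutex;
    std::condition_variable _responses;
};

// Simulates nodeCount render nodes drawing frameCount frames. Returns true
// if no node ever rendered a frame the master had already moved past.
bool renderInLockstep(std::size_t nodeCount, std::size_t frameCount) {
    SwapBarrier master{nodeCount};
    std::atomic<bool> inSync{true};
    std::vector<std::thread> renderNodes;
    for(std::size_t i = 0; i != nodeCount; ++i)
        renderNodes.emplace_back([&]{
            for(std::size_t frame = 0; frame != frameCount; ++frame) {
                // "Render" the frame: the master must still be on it
                if(master.swappedFrames() != frame) inSync = false;
                master.requestSwap(); // wait for the master's response
            }
        });
    for(std::thread& node: renderNodes) node.join();
    return inSync && master.swappedFrames() == frameCount;
}
```

Calling `renderInLockstep(10, 100)` mimics the 10 render nodes of the installation. In the real setup the request and response travel over the network, so a lost or late node stalls every other node, which is exactly the guarantee that keeps all walls showing the same frame.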

The synchronization library itself is based on Boost.Asio, which provides an abstraction layer over UDP and TCP networking. On top of it, we implemented so-called synchObjects that can hold a fixed-size generic data type. These synchObjects allow setting data on the master node and synchronizing it with all pre-defined render nodes using UDP multicast.
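The key property of a synchObject is that it holds data of a fixed size, so it can be sent as one constant-size packet. The following is a minimal sketch under that assumption only; the class name `SynchObject` and its methods are hypothetical, and the actual Boost.Asio multicast send/receive is deliberately left out.

```cpp
#include <array>
#include <cassert>
#include <cstring>
#include <type_traits>

// Hypothetical sketch of a synchObject: holds one fixed-size value and
// converts it to/from a raw byte packet of constant size. The network
// transport (UDP multicast in the real library) is not shown.
template<class T> class SynchObject {
    static_assert(std::is_trivially_copyable<T>::value,
        "only trivially copyable, fixed-size types can be sent as raw bytes");
public:
    using Packet = std::array<unsigned char, sizeof(T)>;

    // Master node side: set new data...
    void set(const T& value) { _value = value; }

    // ...and turn it into a packet that would be multicast to render nodes
    Packet serialize() const {
        Packet packet;
        std::memcpy(packet.data(), &_value, sizeof(T));
        return packet;
    }

    // Render node side: overwrite the local copy with the received bytes
    void deserialize(const Packet& packet) {
        std::memcpy(&_value, packet.data(), sizeof(T));
    }

    const T& get() const { return _value; }

private:
    T _value{};
};
```

Copying raw bytes like this assumes all nodes share the same endianness and struct layout, which is a reasonable assumption for a homogeneous cluster but would need a proper serialization format otherwise.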

In hindsight, the biggest challenge for us was providing the students with a working starter package: a Magnum example that works on our installation but also lets the students develop on their own computers, which might run Windows, a Linux distribution or macOS. Here it really helped that Magnum is available in Homebrew and vcpkg, and that you can build your own Ubuntu package.

Our students could easily start creating their own projects with Magnum. The sheer number of possibilities and the size of the documentation were both an advantage and a challenge. A small example is getting the rotation matrix of a transformation. One might think that using the function rotation(), as in

```cpp
Matrix4 transformationMatrix;
Matrix3x3 rotMatrix = transformationMatrix.rotation();
```

is the obvious choice. However, the documentation notes that this function asserts that the transformation is not skewed in any way, so you might rather use the function rotationScaling(), which returns the upper-left 3×3 part including any scaling, as in

```cpp
Matrix4 transformationMatrix;
Matrix3x3 rotMatrix = transformationMatrix.rotationScaling();
```

However, this also teaches a valuable lesson — read the documentation!
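To see why the two functions behave differently, it helps to look at the math they are based on. The following plain C++ sketch does not use Magnum and is not its implementation; the types and helper names are made up for illustration. It extracts the upper-left 3×3 part (rotation plus scaling), removes scaling by normalizing the columns, and checks whether the normalized columns are still perpendicular, which shear breaks.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Column-major matrices: m[column][row]
using Mat4 = std::array<std::array<float, 4>, 4>;
using Mat3 = std::array<std::array<float, 3>, 3>;

// Upper-left 3x3 part: rotation *and* scaling (and any shear), unchecked
Mat3 upperLeft3x3(const Mat4& m) {
    Mat3 r{};
    for(int c = 0; c != 3; ++c)
        for(int row = 0; row != 3; ++row)
            r[c][row] = m[c][row];
    return r;
}

float dot(const std::array<float, 3>& a, const std::array<float, 3>& b) {
    return a[0]*b[0] + a[1]*b[1] + a[2]*b[2];
}

// Removes scaling by normalizing each column to unit length
Mat3 normalizeColumns(Mat3 m) {
    for(auto& column: m) {
        const float length = std::sqrt(dot(column, column));
        for(float& v: column) v /= length;
    }
    return m;
}

// A pure rotation has mutually perpendicular columns after normalization;
// a sheared (skewed) transformation does not
bool columnsOrthogonal(const Mat3& m, float epsilon = 1e-5f) {
    return std::abs(dot(m[0], m[1])) < epsilon &&
           std::abs(dot(m[0], m[2])) < epsilon &&
           std::abs(dot(m[1], m[2])) < epsilon;
}
```

For a scaled but unsheared matrix, normalizing the columns recovers a valid rotation; for a sheared one the orthogonality check fails, which is the situation a checked rotation extraction has to reject.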

What our students really liked were all the debugging features, especially the Debug class that supports Debug{} << myData; for almost any type. Furthermore, the Primitives library, the built-in Shaders and the GL abstraction layer, which does most of the tedious work for you, allowed our students to quickly develop their own applications and try out different approaches.
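The convenience of the Debug{} << myData; idiom comes from a temporary object that writes each value and finishes the line when it is destroyed. The sketch below is a hypothetical imitation in plain C++ (`MiniDebug` and `Vec3` are made-up names), not Magnum's or Corrade's implementation, and its exact output format is an assumption of this example.

```cpp
#include <cassert>
#include <iostream>
#include <sstream>
#include <string>

// Hypothetical, minimal imitation of the Debug{} << value idiom: values are
// separated by spaces, and a newline is written when the temporary is
// destroyed at the end of the full expression.
class MiniDebug {
public:
    explicit MiniDebug(std::ostream& out = std::cout): _out{out} {}
    ~MiniDebug() { _out << '\n'; }

    template<class T> MiniDebug& operator<<(const T& value) {
        if(!_first) _out << ' ';
        _first = false;
        _out << value;
        return *this;
    }

private:
    std::ostream& _out;
    bool _first = true;
};

// Supporting a custom type only requires a stream operator for it
struct Vec3 { float x, y, z; };
std::ostream& operator<<(std::ostream& out, const Vec3& v) {
    return out << "Vector(" << v.x << ", " << v.y << ", " << v.z << ")";
}
```

With this, `MiniDebug{} << "position:" << Vec3{1.0f, 2.5f, 3.0f};` prints one line to standard output. Because the newline is tied to the destructor, a single statement always produces a single complete line, which is what makes such one-liners handy for quick debugging.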

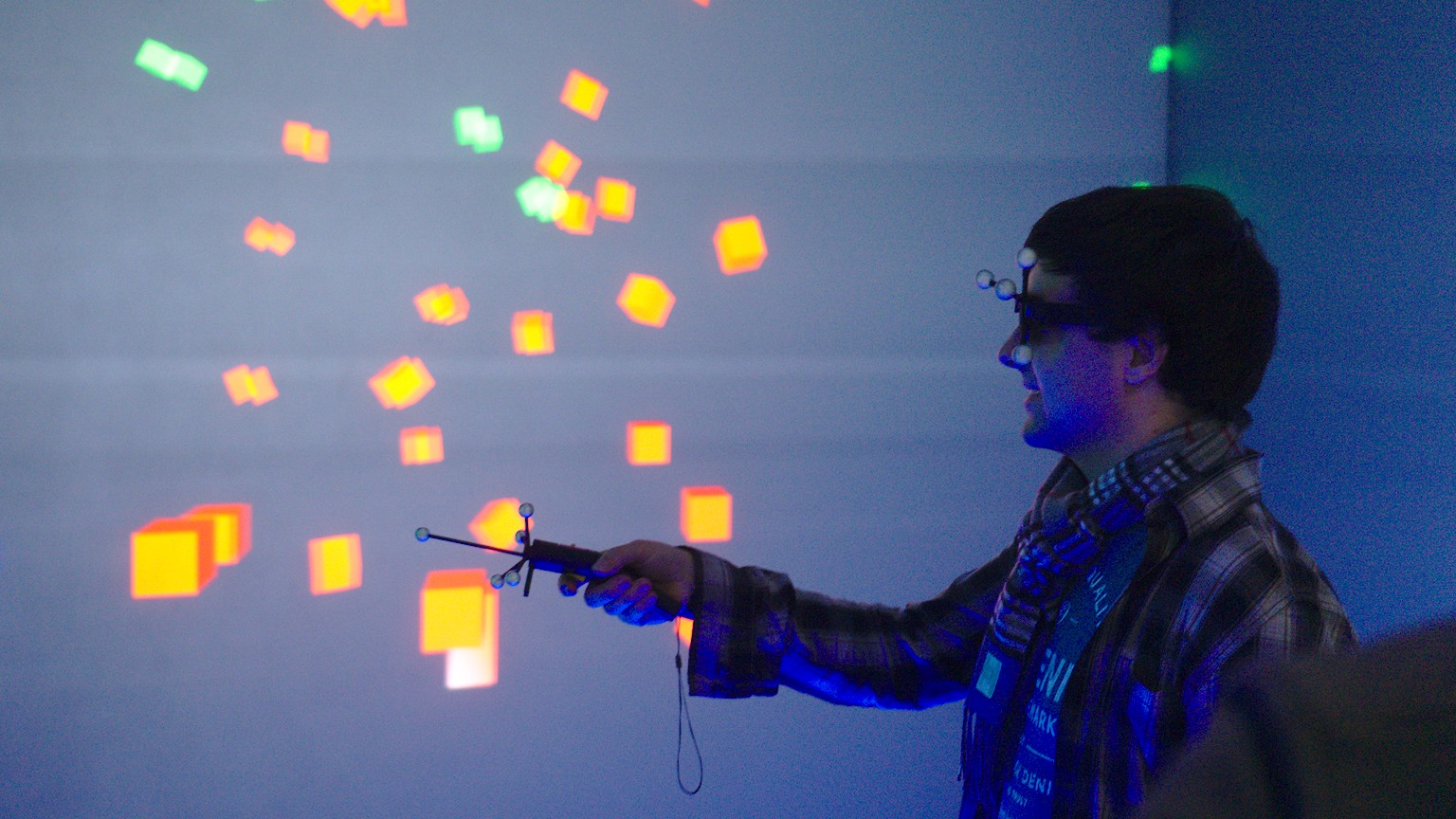

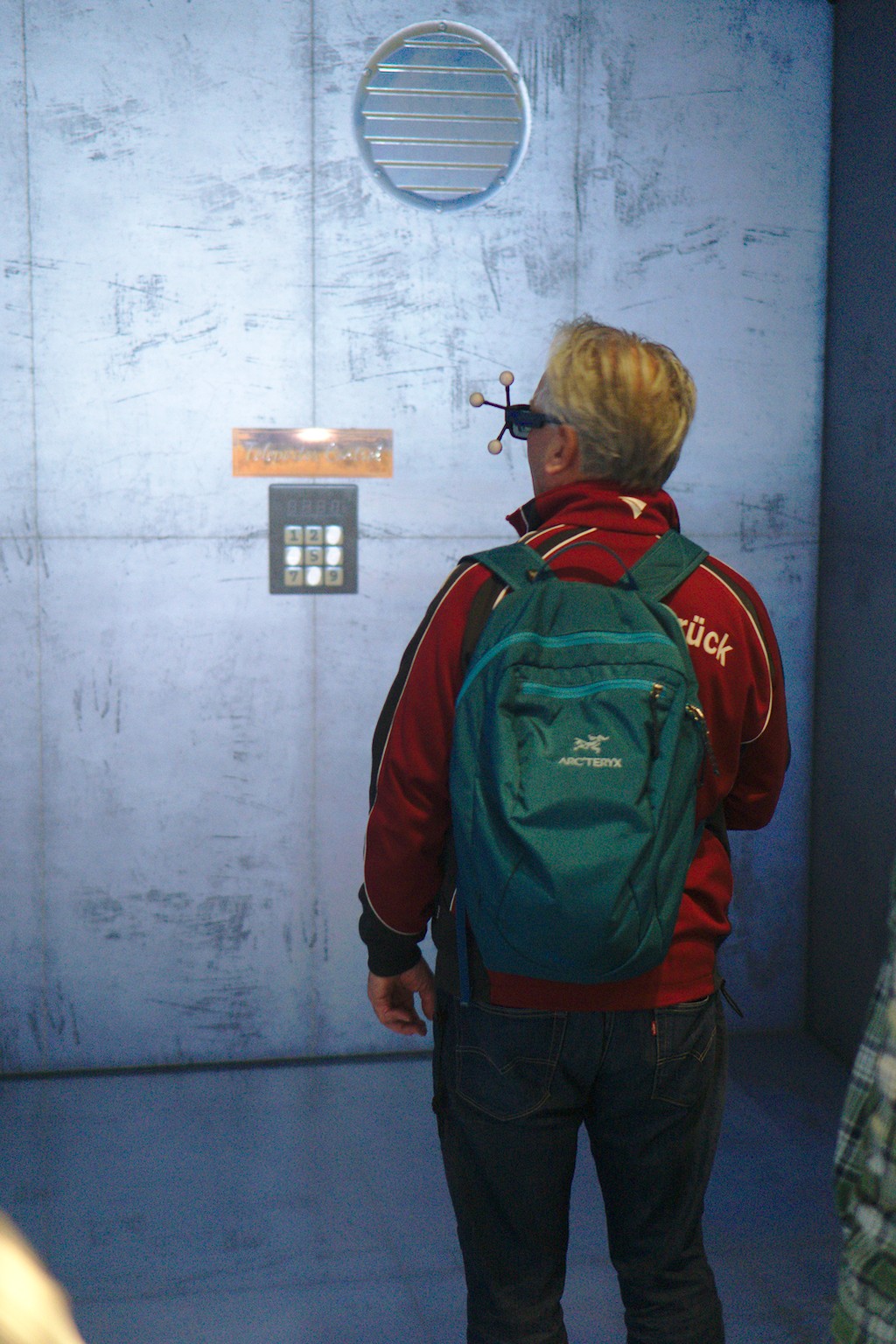

Overall, we are very excited about all the different projects our students developed with Magnum. The photos presented above are from our Open Lab Day in December 2018 where our students presented their work to family, friends and the public.

[1] The V2C at LRZ offers modern technologies for visualising scientific data, allowing for more rapid advancement and significant enrichment of scientific knowledge. The three-dimensional, high-resolution data projection on the Powerwall, the five-sided projection installation, and the interactive navigation provided in the V2C vastly improve the ability of scientists to understand their data and discover new interrelations in it, leading to breakthroughs in understanding and interpreting results. In addition to the natural sciences and technology, the V2C is also used to visualise complex datasets in humanities and social science research, for instance in the fields of arts and multimedia, archaeology, and architecture. A new LED-based Powerwall extends the V2C capabilities with the latest technology.

About the author

Markus Wiedemann is a scientist working with, lecturer teaching about and enthusiast addicted to Virtual Reality. He is currently with the Virtual Reality and Visualisation Centre of the Leibniz Supercomputing Centre, lecturer at the Ludwig-Maximilians-Universität München and member of the Munich Network Management Team.

Guest Posts

This is a guest post by an external author. Do you also have something interesting to say? A success story worth sharing? We’ll be happy to publish it. See the introductory post for details.